Combining Transforms into Pipelines

PADL provides functional operators that combine Transforms into powerful Pipeline instances.

This is most useful for building deep learning pipelines at the macro level, for instance combining different preprocessing steps and augmentation with a model forward pass. You can keep building the individual sub-components as you’re used to, with Python and PyTorch.

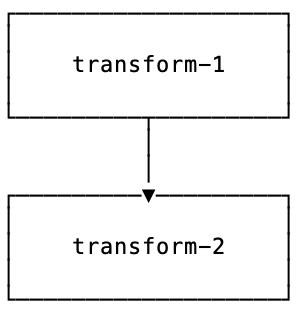

Compose >>

Transforms can be composed using >>:

A Pipeline created by composition processes its input sequentially: the output of the first Transform becomes the input of the second, and so on.

Thus:

>>> (t1 >> t2 >> t3)(x) == t3(t2(t1(x)))

True
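
To make the identity concrete, here is a plain-Python sketch that does not use PADL at all. The `compose` helper and the three toy functions are hypothetical stand-ins for Transforms, chosen only to illustrate the left-to-right data flow of `>>`.

```python
from functools import reduce


def compose(*steps):
    """Return a function that feeds its input through `steps`, left to right."""
    def run(x):
        return reduce(lambda value, step: step(value), steps, x)
    return run


# Toy stand-ins for t1, t2 and t3 (hypothetical, not part of PADL).
def t1(text):
    return text.strip()


def t2(text):
    return text.lower()


def t3(text):
    return text.split()


pipeline = compose(t1, t2, t3)
x = "  Hello PADL World  "

result = pipeline(x)    # data flows t1 -> t2 -> t3
nested = t3(t2(t1(x)))  # the same computation written as nested calls
```

Reading the composition left to right matches the order in which the steps run, which is the main readability gain over nested calls.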

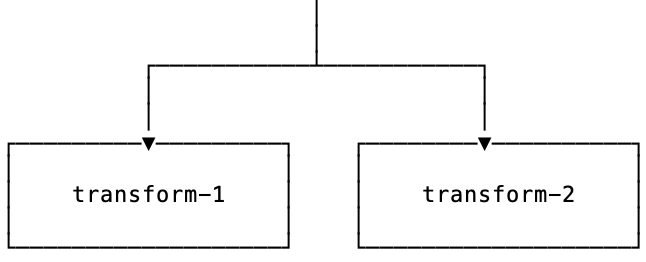

Rollout +

An input can be rolled out to multiple Transforms using +. This means applying different Transforms to the same input. The result is a tuple.

Thus:

>>> (t1 + t2 + t3)(x) == (t1(x), t2(x), t3(x))

True
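
The same behaviour can be sketched in plain Python, without PADL. The `rollout` helper below is a hypothetical illustration of the semantics of `+`, with the built-ins `min`, `max` and `sum` standing in for three Transforms.

```python
def rollout(*steps):
    """Return a function that applies every step to the same input."""
    def run(x):
        return tuple(step(x) for step in steps)
    return run


# Built-in functions stand in for t1, t2 and t3.
stats = rollout(min, max, sum)

# One input goes in, one output per step comes out.
result = stats([3, 1, 2])
```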

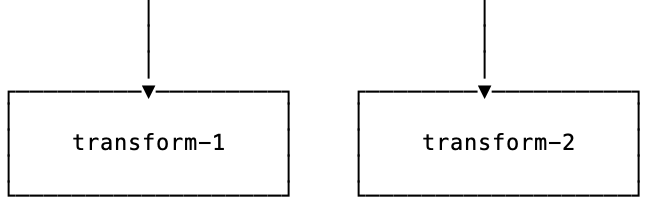

Parallel /

Multiple Transforms can be applied in parallel to multiple inputs using /. The input must be a tuple and the nth Transform is applied to the nth item in the tuple.

Thus:

>>> (t1 / t2 / t3)((x, y, z)) == (t1(x), t2(y), t3(z))

True
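
As a rough plain-Python illustration (not PADL code), the hypothetical `parallel` helper below pairs each step with the item at the same position. It assumes the number of steps equals the number of items and does not model how PADL reacts to a mismatch.

```python
def parallel(*steps):
    """Return a function that applies the nth step to the nth input item."""
    def run(items):
        # zip pairs each step with the item at the same position.
        return tuple(step(item) for step, item in zip(steps, items))
    return run


# Three unrelated functions stand in for t1, t2 and t3.
convert = parallel(str.upper, abs, len)

result = convert(("a", -2, [1, 2, 3]))
```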

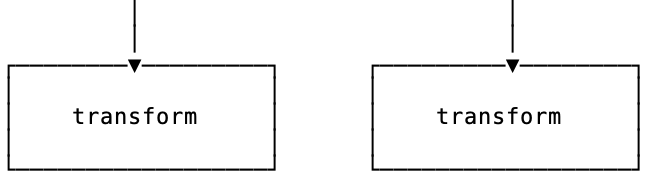

Map ~

Transforms can be mapped using ~. Mapping applies the same Transform to multiple inputs. The output has the same length as the input.

Thus:

>>> (~t)([x, y, z]) == [t(x), t(y), t(z)]

True
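
A minimal plain-Python sketch of the mapping semantics follows. The `map_over` helper is hypothetical, and returning the same container type as the input (list in, list out) is a choice made for this sketch rather than documented PADL behaviour.

```python
def map_over(step):
    """Return a function that applies `step` to every item of its input."""
    def run(items):
        # Rebuild the same container type (list or tuple) as the input.
        return type(items)(step(item) for item in items)
    return run


square_all = map_over(lambda n: n * n)

as_list = square_all([1, 2, 3])
as_tuple = square_all((1, 2, 3))
```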

Grouping Transforms

By default, Pipelines, such as rollouts and parallels, are flattened. This means that even if you use parentheses to group them, the output will be a flat tuple:

>>> (t1 + (t2 + t3))(x) == ((t1 + t2) + t3)(x) == (t1 + t2 + t3)(x) == (t1(x), t2(x), t3(x))

True

To group them, use padl.group():

>>> from padl import group

>>> (t1 + group(t2 + t3))(x) == (t1(x), (t2(x), t3(x)))

True
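
One way to picture the difference between flattening and grouping is the toy class below. It is a sketch written for this page, not PADL's implementation: `Rollout`, `step` and `toy_group` are hypothetical names, and the `grouped` flag is an assumed mechanism for keeping a sub-rollout as one unit.

```python
class Rollout:
    """Toy rollout that flattens nested rollouts unless they are grouped."""

    def __init__(self, steps, grouped=False):
        self.steps = tuple(steps)
        self.grouped = grouped

    def _parts(self):
        # A grouped rollout is kept as one unit; an ungrouped one is unpacked.
        return (self,) if self.grouped else self.steps

    def __add__(self, other):
        return Rollout(self._parts() + other._parts())

    def __call__(self, x):
        return tuple(step(x) for step in self.steps)


def step(fn):
    """Wrap a plain function as a one-element rollout so it supports `+`."""
    return Rollout((fn,))


def toy_group(rollout):
    """Mark a rollout so that `+` treats it as a single step."""
    return Rollout(rollout.steps, grouped=True)


t1 = step(lambda v: v + 1)
t2 = step(lambda v: v * 2)
t3 = step(lambda v: v - 3)

flat = (t1 + (t2 + t3))(10)             # parentheses alone do not nest
nested = (t1 + toy_group(t2 + t3))(10)  # grouping keeps the inner tuple
```

With the input `10`, the ungrouped version yields a flat three-element tuple, while the grouped version yields a two-element tuple whose second item is itself a tuple.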

Continue in the next section to learn how to combine pre-processing, forward pass and post-processing in a single Transform.

Examples

Compose

Building pre-processing pipelines

Use composition to build pre-processing pipelines - exactly as you would using torchvision.transforms.Compose:

from padl import transform, IfTrain

from torchvision import transforms as tvt

from PIL import Image

tvt = transform(tvt)

preprocess_image = (

transform(lambda path: Image.open(path)) # load an image from a path

>> tvt.Resize((244, 244)) # resize the image

>> IfTrain(tvt.RandomRotation(100)) # some augmentation

>> tvt.PILToTensor()

)

This uses

transform() to convert a lambda function and everything from the torchvision.transforms module into a Transform

IfTrain to conditionally execute a step only during training
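
The idea of a step that only runs during training can be sketched in plain Python. The `if_train` helper and its explicit `mode` argument are assumptions made for this illustration; how PADL itself tracks the current mode is not shown here.

```python
def if_train(step):
    """Return a function that applies `step` only when mode is "train"."""
    def run(x, mode):
        # Outside of training the input passes through unchanged.
        return step(x) if mode == "train" else x
    return run


# A toy "augmentation" that reverses a list of values.
augment = if_train(lambda values: list(reversed(values)))

train_out = augment([1, 2, 3], mode="train")
eval_out = augment([1, 2, 3], mode="eval")
```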

Combining pre-processing, model forward pass and post-processing

You can use composition to combine pre-processing, model forward pass and post-processing in one transform using the special Transforms batch and unbatch:

my_classifier_transform = (

load_image # preprocessing ...

>> transforms.ToTensor() #

>> batch # ... stage

>> models.resnet18() # forward

>> unbatch # postprocessing ...

>> classify # ... stage

)

For more details, head over to the next section.

Rollout

Extracting items from a dictionary

One common use case for the rollout is to extract different elements from a dictionary.

>>> from padl import same

>>> extract = (same['foo'] + same['bar'])

>>> extract({'foo': 1, 'baz': 2, 'bar': 3})

(1, 3)

This uses

the “same” utility for getting items
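
For comparison, the same extraction can be written with the standard library only. This sketch assumes nothing about PADL; `operator.itemgetter` plays the role of each item lookup and the hand-written `extract` function plays the role of the rollout.

```python
from operator import itemgetter

get_foo = itemgetter("foo")
get_bar = itemgetter("bar")


def extract(record):
    """Apply both lookups to the same dictionary and return a tuple."""
    return (get_foo(record), get_bar(record))


result = extract({"foo": 1, "baz": 2, "bar": 3})
```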

Generating different versions of an image

In a preprocessing pipeline one could repeat a transform instance to generate multiple views of the same image:

from padl import transform, IfTrain

from torchvision import transforms as tvt

from PIL import Image

tvt = transform(tvt)

preprocess_image = (

transform(lambda path: Image.open(path)) # load an image from a path

>> (tvt.RandomResizedCrop(244)

+ tvt.RandomResizedCrop(244)

+ tvt.RandomResizedCrop(244)) # generate three different crops

)

This generates three different crops of the same image.

Parallel

Pass training samples

Use parallel to pass training datapoints (input, target) through the same pipeline:

model_pass = (

preprocess # some preprocessing steps

>> batch # move to "forward" stage

>> model # apply PyTorch model

)

training_pipeline = (

model_pass / batch

>> loss # a loss function taking a tuple (prediction, target)

)

This uses

batch to move between stages.
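
The data flow of this pattern can be traced with a small numeric sketch in plain Python. Everything here is a hypothetical stand-in: the toy `model_pass` replaces preprocessing plus model, `passthrough` replaces the target branch, batching is left out entirely, and a squared error serves as the loss.

```python
def model_pass(x):
    """Toy stand-in for preprocessing plus model: a fixed linear function."""
    return 2.0 * x + 1.0


def passthrough(target):
    """The target branch leaves its input unchanged in this sketch."""
    return target


def loss(pair):
    """Squared error on a (prediction, target) tuple."""
    prediction, target = pair
    return (prediction - target) ** 2


def training_pipeline(sample):
    """Apply one branch per tuple item, then feed both results to the loss."""
    x, target = sample
    return loss((model_pass(x), passthrough(target)))


loss_value = training_pipeline((3.0, 5.0))
```

The input `3.0` is mapped to the prediction `7.0`, the target `5.0` passes through, and the loss receives both as one tuple.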

Map

Example: convert multiple images to tensors

To continue the above example, one could use map to convert all resulting PIL Images to tensors.

from padl import transform, IfTrain

from torchvision import transforms as tvt

from PIL import Image

tvt = transform(tvt)

preprocess_image = (

transform(lambda path: Image.open(path)) # load an image from a path

>> (tvt.RandomResizedCrop(244)

+ tvt.RandomResizedCrop(244)

+ tvt.RandomResizedCrop(244)) # generate three different crops

>> ~ tvt.PILToTensor()

)

This Transform takes an image path and returns a tuple of tensors.